One of the biggest problems facing artificial intelligence (AI) models is that unexpected things can happen during their training. Recently, Gemini, Google's AI model, exhibited some racist behavior. After the scandal that followed, Google came out to explain what went wrong…

After the controversy, Google apologized in a post on X and later provided a more detailed explanation of Gemini's errors on its blog.

Google's explanation for Gemini's overcompensation

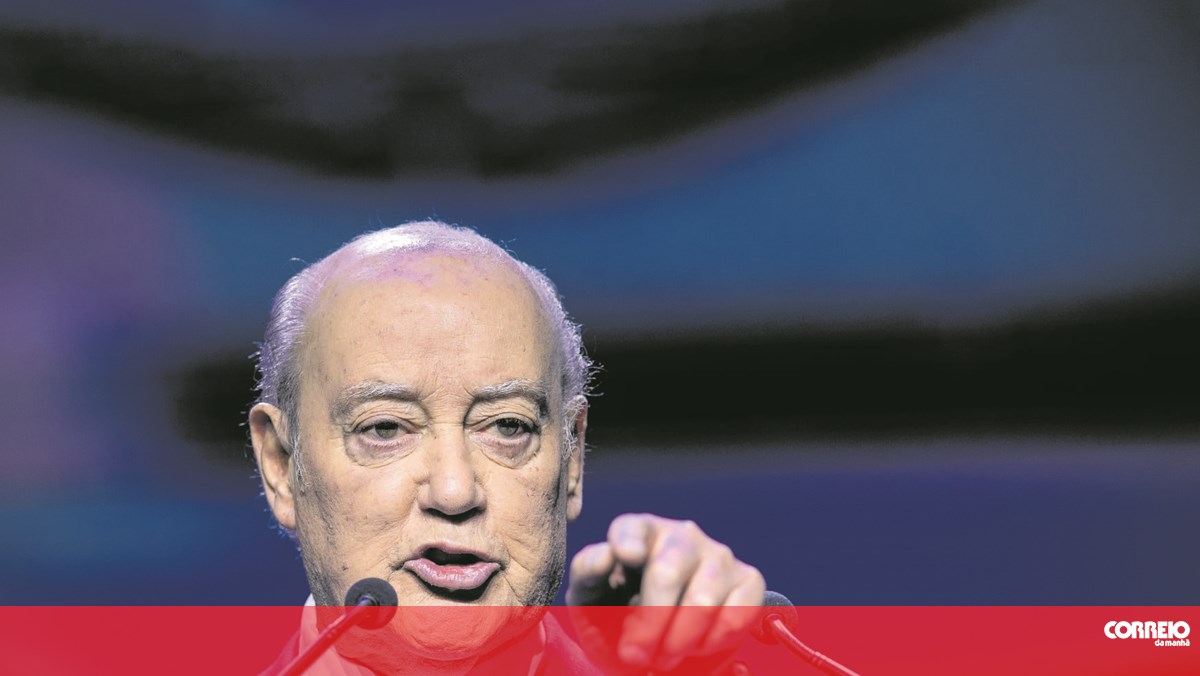

Prabhakar Raghavan, Google's senior vice president of search, assistant, ads, geomapping and related products, begins the post by acknowledging the mistake and apologizing. Google says it "doesn't want Gemini to refuse to create images of any particular group" or "create images that are historically or otherwise inaccurate." These two goals can actually pull in opposite directions.

So the post states that if you ask Gemini for images of a specific type of person, such as “a black teacher in the classroom,” “a white vet with a dog,” or people in a particular cultural or historical context, you should get an answer that reflects exactly what you asked for.

Mountain View claims to have identified two issues responsible for what happened. More specifically:

- “Our adjustment to ensure that Gemini showed a range of people did not take into account cases where it clearly should not have shown a range.”

- “Over time, the model became more cautious than we intended, refused to respond to certain requests, and misinterpreted some very simple requests as sensitive.”

As a result, Gemini's image generation tool, which is built on Imagen 2, "overcompensated in some cases and was too conservative in others."

Google has promised to work on further improvements, including “extensive testing,” before the feature for generating images of people is reactivated.

In the post, Raghavan makes a clear distinction between Gemini and Google's own search engine, and strongly recommends relying on Google Search, explaining that "its separate systems surface new, high-quality information" on these kinds of topics, with sources from all over the web.

The statement ends with a warning: Google cannot promise that this won't happen again, which leaves the door open to more inaccurate, offensive, or misleading results. However, the company says it will continue to take the necessary measures to correct the situation. Finally, the post highlights the potential of AI as an emerging technology with many possibilities.
